Historical Notes

In the spring semester of 1999, in RPI’s Graduate Research Seminar class, the topic I researched and presented on was “Applications of Multi-Agent System (MAS) in Transportation System”. My professor’s feedback? “The ideas are creative and enticing, but borderline daydreaming”. The grade I received? B. My dream of a multi-agent intelligent system? Shattered.

Fast forward twenty-seven years to 2026, when no conversation about AI can be carried on without the mandatory mention of “Agent”. What has changed?

- Machine perception, fueled by the new computing paradigm of deep neural networks (DNNs). Machines can see, hear and read now.

  - Object identification and segmentation from images and videos.

  - Voice-to-text transcription.

  - …

- Machine “understanding”, fueled by LLMs. Machines appear to be able to understand natural language now.

- Machine “reasoning”, emerged from LLMs. Machines appear to be able to reason with natural language now.

Since machine perception models enjoy the kind of reliability transistors have, they can be used as Lego blocks to build automation systems the way transistors are used to build a computer system. But when it comes to machine understanding and reasoning, or the LLMs from which both emerge, the picture is totally different:

- LLMs are statistical by nature; they hallucinate and are NOT reliable.

- The understanding and reasoning capabilities that emerged from LLMs are accidental. They’re merely a by-product of the most likely flow of natural language.

- If the recent Nature paper “Language is primarily a tool for communication rather than thought” is to be believed, LLMs can’t think!

This leads to our conclusions:

- An LLM is a slippery foundation to build any system on!

- Multiple LLMs woven into a system have a fifty-fifty chance of converging toward the desired behavior or diverging into chaos.

But the success of OpenClaw and Manus in real-world agentic use cases directly contradicts our cautionary conclusions above. What makes them work? That’s the question we set out to answer.

Agents in numbers

To make sense of the emerging space of intelligent agents, we categorize them by three levels of sophistication, two forms, three roles they play and an infinite number of application areas.

Three levels

Disclaimer: I don’t have any experience building agents yet. But just as one can tell an electric car is more advanced than a horse-drawn wagon simply from the power source, we can categorize the sophistication of agentic systems by examining the components that constitute them:

- Prompt driven. At the most rudimentary level, the agentic system is constructed solely from a set of textual prompts.

- Engineering driven. One level up, the agentic system features engineering subsystems corresponding to memory, task decomposition, planning, scheduling…

- Science driven. At the highest level, the agentic system is built from scientific principles and methodologies.

Two forms

So far we see two forms of agents:

- Digital. Software defined agents acting in the digital world.

- Physical. Hardware implemented agents acting in the physical world.

Three roles

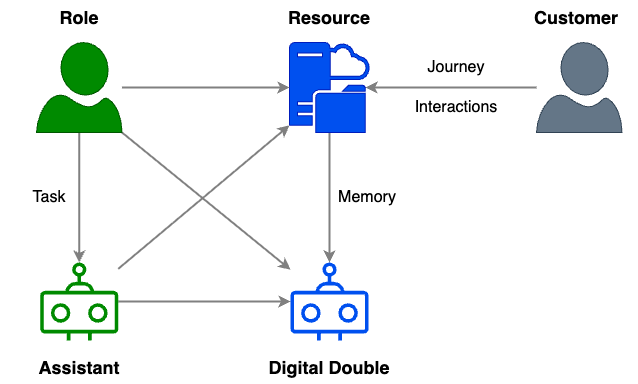

Depending on the roles they play in a digitized enterprise, several agent types and control patterns need to be developed:

- A role-oriented agent is called an assistant.

  - Different agents are developed for different roles, each supporting the set of tasks and workflows for its role.

- A resource-oriented agent is called a digital double.

  - A technical support Teams channel could have a digital double that assimilates the knowledge therein and solves problems.

  - The business metrics database of a retail analytics data platform could have a digital double that generates and executes SQL based on a business analyst’s natural language prompt.

  - A super-high-net-worth customer, Jane Doe, could have a digital double that memorizes all aspects of her life that a financial service firm knows of and helps the firm’s phone associate with useful insights when Jane calls in.

  - …

- An agent oriented toward low-level operations is called an automaton.

  - An automaton actualizes a piece of the firm’s procedural knowledge.

  - Assistants and digital doubles delegate subtasks to automatons.

Infinite applications

As man-made intelligence, agents will eventually permeate all corners of human life and reach places humans cannot. But in their infancy, their success can only be observed in areas that are less stringent and practical. In order of agents’ success:

- Creative. Anything that’s imaginative.

  - Image, video, virtual world generation.

  - Performing arts such as dance.

- Programmatic. Anything that can be programmed.

  - Dashboard, Website

  - Excel spreadsheet

- Descriptive. Anything that can be retrieved and summarized.

  - Research report

- Industrial

- Consumer

- …

In summary

Organizing the two dimensions of the agentic space into a table:

| | Prompted | Engineered | Scientific |
| --- | --- | --- | --- |
| Digital | ChatGPT | Coding Agents, Personal Agents (OpenClaw), Multi-agent system (Manus) | ??? |
| Physical | n/a | Room cleaning robot, the wheeled LG CLOi GuideBot roaming NC office floor | Dancing Humanoid, Industrial Robots |

Prompt driven “agent”

If an agent’s behavior is defined solely by a bunch of prompts supplied to LLMs, it’s prompt driven. Since nothing new is added, a prompted “agent” really is nothing but the LLM it’s calling.

Engineering driven agent

An engineered agent is a coordinated system of components mimicking the perceptive and cognitive subsystems of an intelligent being. The LLM’s strengths are exploited and its shortcomings patched up. Building intelligence out of mere next-word predictors, and useful actions despite hallucinations: isn’t that the allure of engineering?

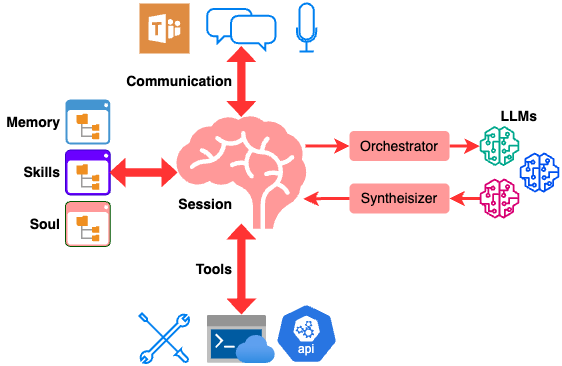

Single agent: OpenClaw

Since OpenClaw is all the rage in the news these days, let’s dissect the lobster. Note how little space the LLMs take up in the overall system diagram. The LLM is the core, yet all the agentic effort is spent on the rest of the system.

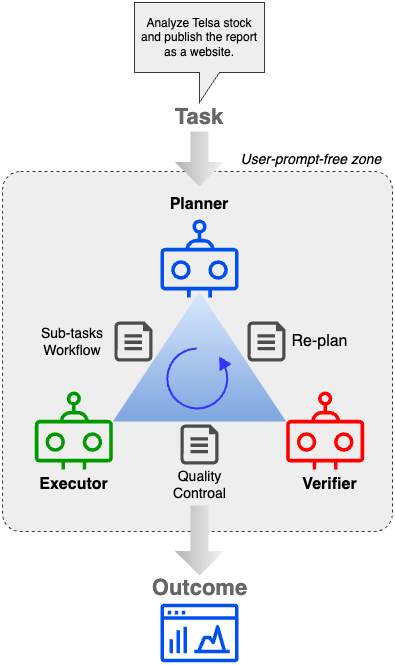

Multi-agent: Manus

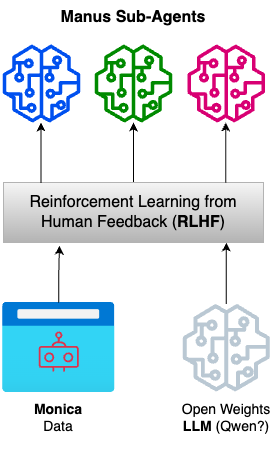

Manus, which was bought by Meta, brings agentic engineering one level up into the multi-agent realm:

Where are the LLMs? It has never been published, but each of Manus’ subagents most likely consists of an LLM post-trained with data collected from Monica, the Manus team’s agentic browser plug-in. Manus’ “Less Structure, More Intelligence” motto suggests two things:

- There are structures like those of OpenClaw in Manus.

- They’re trying to generalize the structure while training not one but a fleet of sub-agents post-trained for different domains.

Science driven agent

“Practical men who believe themselves to be quite exempt from any intellectual influence, are usually the slaves of some defunct economist. Madmen in authority, who hear voices in the air, are distilling their frenzy from some academic scribbler of a few years back”

― John Maynard Keynes

Once we calm down from the excitement and examine what the current crop of agents can actually do, a not-so-exciting picture emerges: the tasks are the simple and boring ones humans don’t want to do, and the results are not quite at the level of human experts. But when Unitree’s G1 humanoid performed that backflip as part of a choreographed dance routine, it shocked us right into the uncanny valley of eeriness, discomfort and … madness! Robotics, which has traditionally been built on top of automatic control theory, is now reinvigorated by LLMs and reinforcement learning. We posit that digital agents, which operate in a much simpler discrete space, will benefit more from these theories.

Automatic Control Agent

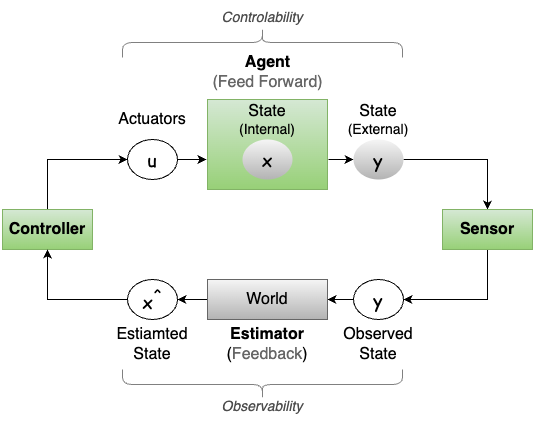

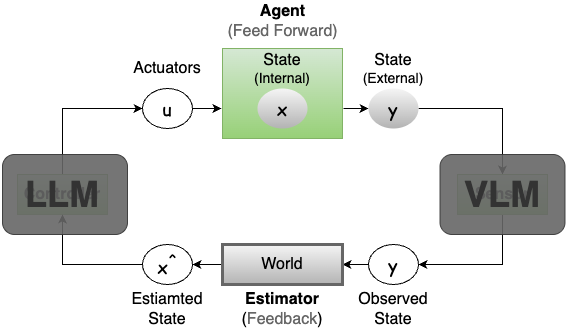

Without delving into the details of control theory, but following its classic formulation, we cast the digital agent as a control system with unobservable internal state and a closed feedforward/feedback loop.

The benefit of this formulation of an agentic system is the theoretical and experiential richness of the field of automatic control. For example, the concepts and practices of observability and controllability can be used to reason about and control (pun intended) the vulnerabilities of data-driven paradigms such as reinforcement learning, which we’ll talk about next.
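To make the formulation a little more tangible, here is a minimal Python sketch of such a closed loop. Everything in it is an assumption made for illustration: the scalar hidden state, the `sense`, `estimate` and `control` functions, the gain of 0.5 and the target value are hypothetical and not taken from any real agent.

```python
"""Toy closed-loop control agent: the controller never sees the internal
state, only an estimate reconstructed from the external observation."""


def sense(internal_state: float) -> float:
    # Sensor: exposes an external observation, not the internal state itself.
    # The 2x scaling is an arbitrary stand-in for "what the outside can see".
    return 2.0 * internal_state


def estimate(observation: float) -> float:
    # Estimator: a model of the agent's internals, used to recover the
    # hidden state from the observation (here, by inverting `sense`).
    return observation / 2.0


def control(estimated_state: float, target: float, gain: float = 0.5) -> float:
    # Controller: proportional feedback on the estimated error.
    return gain * (target - estimated_state)


def run_loop(initial_state: float, target: float, steps: int) -> list[float]:
    state = initial_state
    history = [state]
    for _ in range(steps):
        action = control(estimate(sense(state)), target)
        state = state + action  # plant: the action nudges the hidden state
        history.append(state)
    return history


trajectory = run_loop(initial_state=0.0, target=10.0, steps=20)
print(trajectory[:4])  # [0.0, 5.0, 7.5, 8.75]
```

Because the estimator is an explicit function, a wrong estimate can be inspected and corrected directly, which is the kind of observability the control formulation is meant to buy.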

Reinforcement Learning Agent

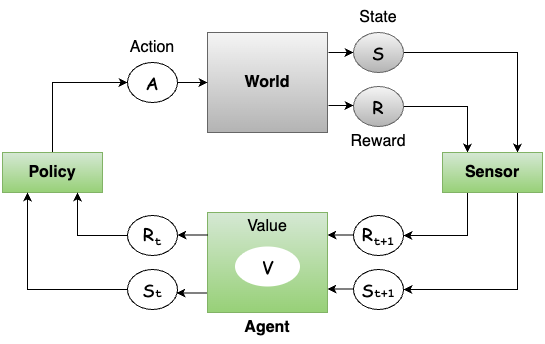

Here is the same digital agent cast as a reinforcement learning system:

The difference between the two formulations is left as homework for motivated readers, but the data-driven nature of reinforcement learning needs to be emphasized with a picture:

We urge you to stare at the picture for a while and think:

- How can we empower the hundreds of thousands of a firm’s customer-facing associates (think call center employees, advisors) to train their digital agents so effortlessly that they don’t even realize they’re doing so as they provide insanely good service to their customers?
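One possible reading of the question above is that an associate's everyday reaction to a suggestion becomes the reward signal. The sketch below is a deliberately tiny illustration of that idea: an epsilon-greedy value learner over a handful of candidate suggestions. The suggestion names, the reward attached to each reaction, the fixed reactions and the learning parameters are all assumptions for illustration; a real system would need far richer state and feedback.

```python
import random

# Hypothetical candidate suggestions the digital agent can offer.
SUGGESTIONS = ["generic_script", "personalized_insight", "product_upsell"]

# Hypothetical implicit feedback: what the associate does with a suggestion.
FEEDBACK_REWARD = {"accepted": 1.0, "edited": 0.2, "rejected": 0.0}

# Stand-in for the associate's behavior, fixed here so the run is repeatable.
ASSOCIATE_REACTION = {
    "generic_script": "rejected",
    "personalized_insight": "accepted",
    "product_upsell": "edited",
}


def train(steps: int = 500, epsilon: float = 0.2, alpha: float = 0.5,
          seed: int = 0) -> dict[str, float]:
    rng = random.Random(seed)
    value = {s: 0.0 for s in SUGGESTIONS}  # estimated value of each suggestion
    for _ in range(steps):
        if rng.random() < epsilon:
            choice = rng.choice(SUGGESTIONS)    # explore
        else:
            choice = max(value, key=value.get)  # exploit the current best
        reward = FEEDBACK_REWARD[ASSOCIATE_REACTION[choice]]
        # Incremental update toward the observed reward.
        value[choice] += alpha * (reward - value[choice])
    return value


values = train()
best = max(values, key=values.get)
print(best)
```

With these made-up reactions the learner should settle on `personalized_insight`. The point of the sketch is only that the associate never labels anything explicitly: accepting, editing or rejecting a suggestion is the training data.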

Why World Models?

Astute readers will have noticed the box labeled “World” in the Automatic Control Agent presented on the Agent tab earlier. It models the internal workings of the agent, and can be used to estimate the agent’s unobservable internal state from its external state. The estimated internal state then informs the controller’s choice of the next best action.

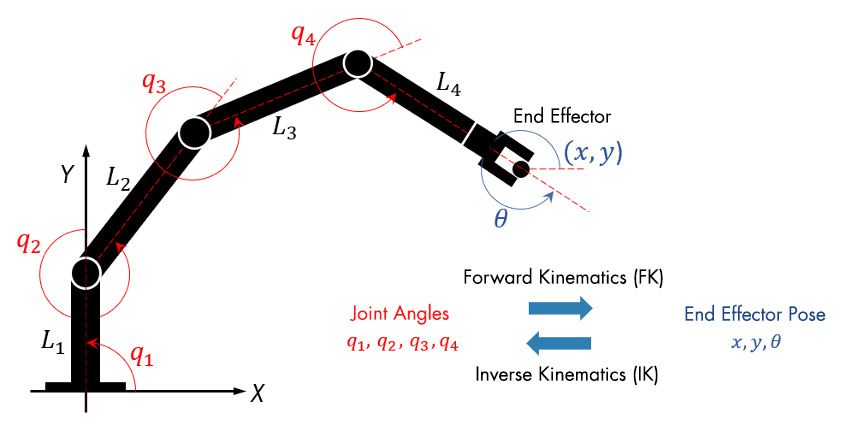

The ideal white box world model

Ideally, the internal workings of the agent are understood so well that they can be derived mathematically or calculated numerically, like what Matlab can do for robotic arms. Given the robotic arm’s final position and angle (external state), it can calculate the angle of each joint and, with a dynamics model, the joint torques (internal state). The inverse kinematics world model of a robotic arm is considered a white-box model.
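As a small illustration of what “white box” means here, the sketch below solves the textbook inverse kinematics of a planar two-link arm in closed form. The two-link planar geometry, the link lengths and the function names are assumptions chosen for brevity; the sketch covers position only, with no end-effector angle and no torques.

```python
import math


def inverse_kinematics(x: float, y: float, l1: float, l2: float) -> tuple[float, float]:
    """Joint angles (shoulder, elbow) in radians that place the tip at (x, y)."""
    cos_elbow = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target is out of reach")
    elbow = math.acos(cos_elbow)  # one of two mirror-image solutions (elbow >= 0)
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow),
                                             l1 + l2 * math.cos(elbow))
    return shoulder, elbow


def forward_kinematics(shoulder: float, elbow: float, l1: float, l2: float) -> tuple[float, float]:
    """Tip position reached by the given joint angles."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y


# External state (tip position) -> internal state (joint angles) -> check.
shoulder, elbow = inverse_kinematics(1.0, 1.0, l1=1.0, l2=1.0)
tip = forward_kinematics(shoulder, elbow, l1=1.0, l2=1.0)
print(shoulder, elbow, tip)
```

Every intermediate quantity has a geometric meaning and can be checked by running the forward model, which is exactly what a black-box model does not offer.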

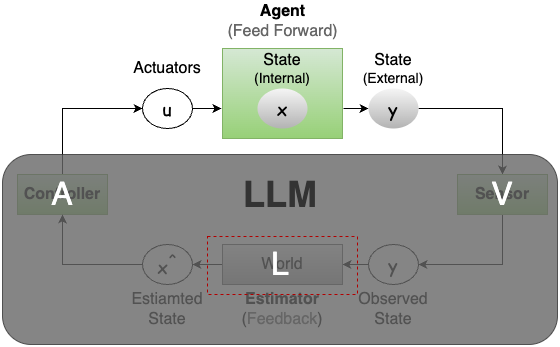

The black box that is LLM

VLA (Vision-Language-Action) has been the leading design paradigm for today’s humanoids. What it amounts to is replacing the sensor, estimator (world) and controller of an automatic control agent with a single multi-modal LLM, which is a black box through and through. While it works amazingly well most of the time, the downside from losing the observability and controllability afforded by white-box control systems cannot be overstated. A VLA humanoid may behave 99.999999999999999999999% of the time, but would you want to be near it when it hallucinates at a random time?

Breaking up the black box

The recent emergence of World Model startups from heavyweights of the AI world is an attempt to break up the aforementioned big LLM black box into smaller black boxes. “They are still all black boxes. Why bother?” you may ask. A couple of reasons come to mind:

- Each smaller black-box LLM now has a narrower purpose, for which it can be post-trained better.

  - A vision language model (VLM) as the sensor.

  - A domain-specific world LLM or non-LLM as the estimator.

  - A reasoning LLM as the controller.

- The observed and estimated states are now visible, which helps to better post-train each of the black-box LLMs.

- The system is now more modular, which is always better.

But the ultimate goal is to turn the black box models into white box ones one by one.
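The decomposition can be sketched as three swappable stages with the intermediate states recorded at each step. This is a hypothetical skeleton, not any vendor's architecture: the `ModularAgent` class, the stand-in functions and the fee example are invented for illustration, and plain Python functions play the part of the models.

```python
from dataclasses import dataclass, field
from typing import Callable

# Each stage is just a callable here; in practice each could wrap a model.
Sensor = Callable[[str], dict]      # raw input -> observed state
Estimator = Callable[[dict], dict]  # observed state -> estimated state
Controller = Callable[[dict], str]  # estimated state -> action


@dataclass
class ModularAgent:
    sensor: Sensor
    estimator: Estimator
    controller: Controller
    trace: list = field(default_factory=list)  # intermediate states, kept visible

    def step(self, raw_input: str) -> str:
        observed = self.sensor(raw_input)
        estimated = self.estimator(observed)
        action = self.controller(estimated)
        self.trace.append(
            {"observed": observed, "estimated": estimated, "action": action}
        )
        return action


def keyword_sensor(raw_input: str) -> dict:
    # Stand-in for a VLM: turns raw input into a structured observation.
    return {"mentions_fee": "fee" in raw_input.lower()}


def black_box_estimator(observed: dict) -> dict:
    # Stand-in for a domain LLM guessing the customer's hidden intent.
    return {"intent": "complaint" if observed["mentions_fee"] else "other"}


def white_box_estimator(observed: dict) -> dict:
    # Same interface, but backed by an explicit, inspectable rule table.
    rules = {True: "complaint", False: "other"}
    return {"intent": rules[observed["mentions_fee"]]}


def simple_controller(estimated: dict) -> str:
    # Stand-in for a reasoning LLM choosing the next action.
    return "escalate" if estimated["intent"] == "complaint" else "answer"


agent = ModularAgent(keyword_sensor, black_box_estimator, simple_controller)
action = agent.step("Why was I charged this fee?")

agent.estimator = white_box_estimator  # swap one box without touching the others
action_after_swap = agent.step("Why was I charged this fee?")
print(action, action_after_swap, len(agent.trace))
```

The `trace` list is where the observed and estimated states become visible, and the estimator swap shows how one box at a time could be replaced by a white-box equivalent behind the same interface.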

Spatial World Model

The simplest world to model is probably the three-dimensional one we live in. AI heavyweights are already on it:

- World Labs Marble: 3D Gaussian splatting + rasterization.

- Google Genie 3: auto-regressive, frame-by-frame image generation.

Despite the technical differences, neither produces a real 3D model in the sense of those produced by AutoCAD. You may walk around or even look into a cube in the generated world, but you can’t ask practical questions such as “what’s the volume of this cube?” Fantasy, entertainment, or even education, sure. Spatial Intelligence as Fei-Fei Li claims? Not so much.

Physical World Model

Yann LeCun’s dream is even grander: with his proposed JEPA architecture, he wants machines to learn physical laws such as F = ma by watching countless videos. Good luck with that.

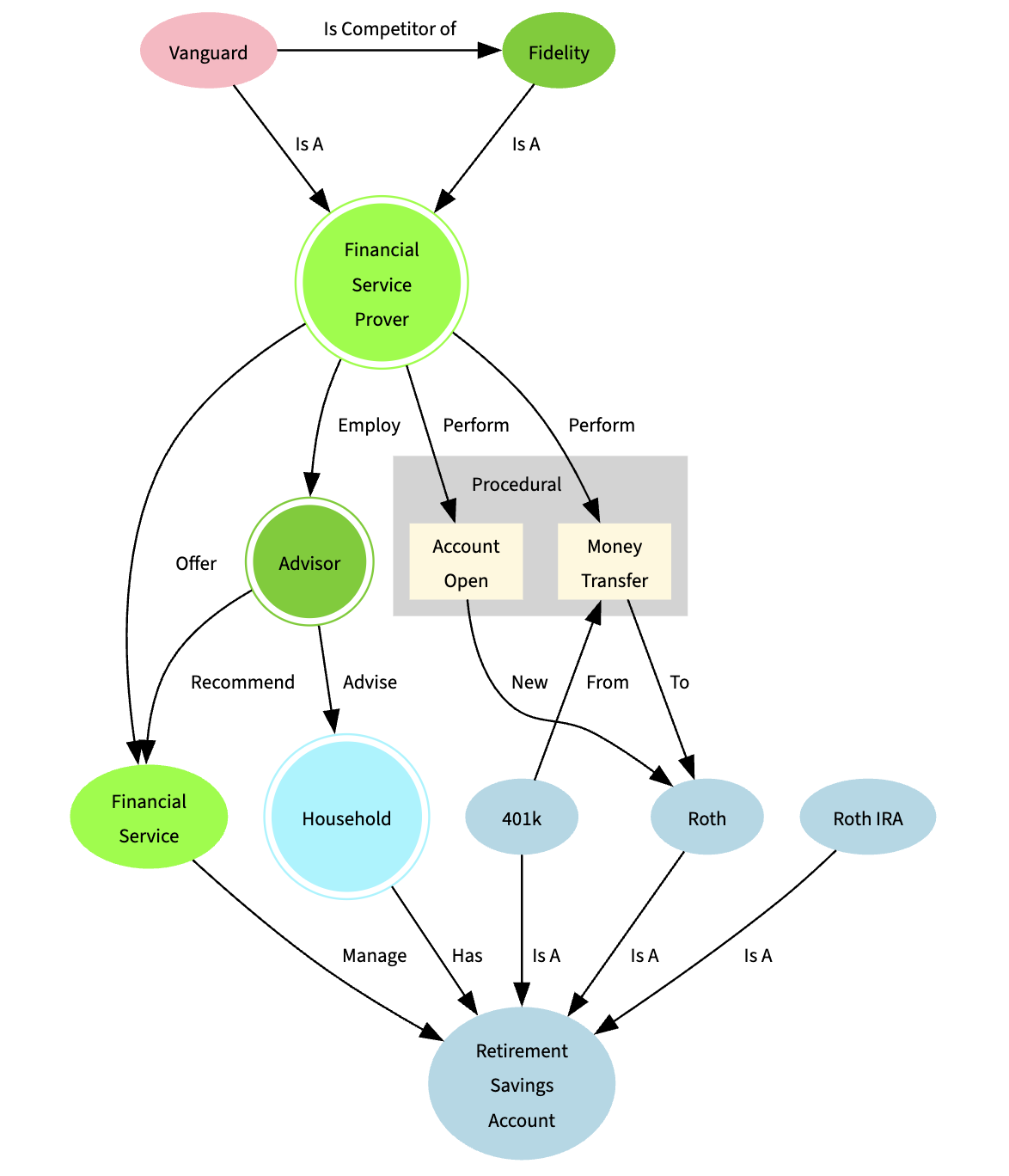

Symbolic World Model

Clear-headed about the fact that there is not much to see in neural world models so far, I can’t help entertaining the idea of going “back to symbolic” for world models. Ideally, a world model is:

- White boxed as an end product.

  - A white-box world model affords the explainability and certainty the finance industry must have.

  - It must be symbolic and structured:

    - Ontology, logic, computational code…

- Cross-sourced during the continuous curation process.

  - Symbolic.

  - Neural. Neural network based tools such as LLMs can be utilized to extract symbolic structures out of unstructured data.

  - Manual. Human efforts are indispensable in designing, validating and improving the world artifacts.

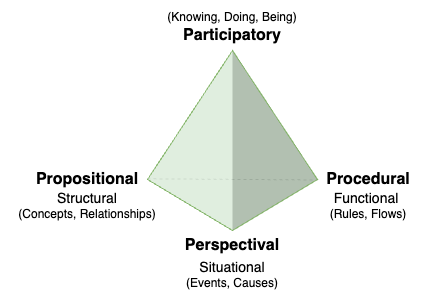

The 4P framework of knowing

John Vervaeke’s 4P framework of knowing can be exapted to the construction of a symbolic World Model.

Ontology based

Ontology and taxonomy can be the symbolic bricks (concepts) and mortar (relationships) to build out the structural and functional organization of a domain-specific (e.g. financial service) World Model.

We’re acutely aware that this formalized approach is hard to scale, but however little we model the domain specific world, we know for sure it won’t hallucinate like LLMs do.
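A minimal sketch of the bricks-and-mortar idea, assuming concepts and relationships are stored as (subject, predicate, object) triples. The concept names, the `is_a` and `has_rule` predicates and the helper functions are hypothetical examples, not a proposed schema.

```python
from typing import Optional

# Concepts are nodes, relationships are labeled edges, stored as
# (subject, predicate, object) triples. All entries are hypothetical.
TRIPLES = {
    ("RothIRA", "is_a", "RetirementAccount"),
    ("RetirementAccount", "is_a", "Account"),
    ("BrokerageAccount", "is_a", "Account"),
    ("RothIRA", "has_rule", "AnnualContributionLimit"),
}


def ancestors(concept: str) -> set[str]:
    """All concepts reachable from `concept` by following is_a edges."""
    found: set[str] = set()
    frontier = [concept]
    while frontier:
        current = frontier.pop()
        for subject, predicate, obj in TRIPLES:
            if subject == current and predicate == "is_a" and obj not in found:
                found.add(obj)
                frontier.append(obj)
    return found


def is_a(concept: str, category: str) -> Optional[bool]:
    """True/False when the ontology knows the concept, None when it does not."""
    known = {s for s, _, _ in TRIPLES} | {o for _, _, o in TRIPLES}
    if concept not in known:
        return None  # an explicit "I don't know" instead of a guess
    return category in ancestors(concept)


print(is_a("RothIRA", "Account"))
print(is_a("BrokerageAccount", "RetirementAccount"))
print(is_a("CryptoWallet", "Account"))
```

The third query is the interesting one: for a concept outside the modeled world the sketch returns `None` rather than inventing an answer, which is the property the paragraph above is after.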

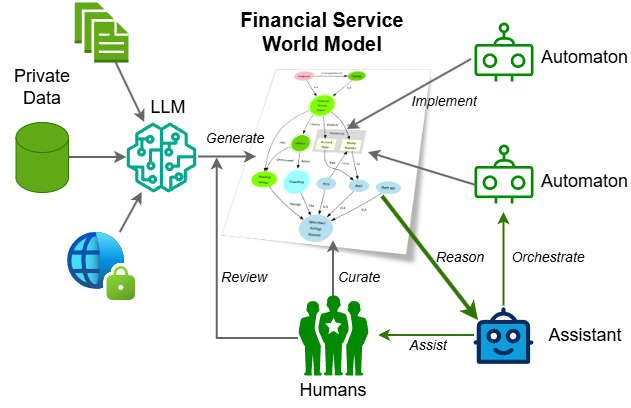

Cross and crowd sourced

LLM’s proper place in the building of the financial service world model is to extract conceptual and procedural knowledge from a firm’s vast sources of private data:

- Help desk question and answer database

- Internal domain knowledge articles

- Customer call transcripts

- …

Humans play the role of reviewers, approvers, auditors and editors of LLM generated as well as human curated knowledge.

Infinitely useful

With the brain of the financial service world model, many novel use cases may be imagined or reimagined:

- Routine things can be automated, based on the procedural knowledge in the world model.

- Certain financial advice can be derived, based on the factual and rule knowledge in the world model.

- An LLM plugged in can do real reasoning, based on the conceptual and relational knowledge in the world model.

- …